Monad: Bringing Back The EVM

Introduction

Monad is a high-performance, EVM-compatible L1 targeting 10,000 TPS (1 billion gas per second), 500ms block times, and 1-second finality. The chain was built from the ground up to address several issues that the EVM faces today, more specifically:

The EVM processes transactions sequentially, which creates bottlenecks and slower transaction times during periods of high network activity.

Throughput is low, at 12-15 TPS, and block times are long, at 12 seconds.

The EVM requires gas fees for every transaction. These fees can fluctuate significantly and become prohibitively expensive during periods of high network demand.

Why Scale The EVM

Monad offers full EVM bytecode and Ethereum RPC API compatibility, enabling integration for both developers and users without requiring changes to their existing workflows.

A common question is why scale the EVM when more performant alternatives like the SVM exist. The SVM offers faster block times, cheaper fees, and higher throughput compared to most EVM implementations. Nevertheless, the EVM comes with a few key benefits, which stem from two primary factors: the substantial capital within the EVM ecosystem and extensive developer resources.

Capital Base

The EVM commands significant capital, with Ethereum holding nearly $52B in TVL compared to Solana's $7B. L2s such as Arbitrum and Base each hold approximately $2.5-3B in TVL. Monad, and other EVM-compatible chains, can benefit from the large capital base on EVM chains through both canonical and third-party bridges that can integrate with minimal friction. This substantial EVM capital base remains relatively active and can attract both users and developers as:

Users gravitate toward liquidity and high volumes

Developers seek high volumes, fees, and application visibility

Developer Resources

Ethereum's tooling and applied cryptography research carry over directly to Monad while gaining improved throughput and scale. This includes:

Applications

Developer tooling (Hardhat, ApeWorX, Foundry)

Wallets (Rabby, MetaMask, Phantom)

Analytics/indexing (Etherscan, Dune)

Electric Capital's developer report shows 2,788 full-time Ethereum developers as of July 2024, followed by Base with 889 and Polygon with 834. Solana ranked seventh with 664 developers, behind Polkadot, Arbitrum, and Cosmos. While some argue crypto's overall developer numbers remain small and therefore should be largely ignored (and that resources should be focused on bringing in external talent), it is clear that significant EVM talent exists within the "small" pool of crypto developers. Furthermore, given that most talent works in EVM, and most tooling is in EVM, it's likely that new developers will have to or choose to learn and develop in the EVM. As Keone mentions in an interview, developers can choose to:

Build portably for the EVM, enabling multi-chain deployment with limited throughput and higher fees

Build for performance and lower costs within specific ecosystems like Solana, Aptos, or Sui

Monad aims to combine both of these approaches. With the majority of tooling and resources tailored for the EVM, applications developed within its ecosystem can be seamlessly ported. Combined with its relative performance and efficiency—bolstered by Monad's optimizations—it becomes evident that the EVM serves as a strong competitive moat.

Apart from developers, users also prefer familiar workflows. EVM workflows have become standard through tools like Rabby, MetaMask and Etherscan. These established platforms facilitate easier bridge and protocol integration. Furthermore, fundamental applications (AMMs, money markets, bridges) can launch immediately. These basic primitives are essential for chain sustainability alongside novel applications.

Scaling the EVM

Two major approaches exist to scale the EVM:

Move execution offchain: offboard execution to other virtual machines through rollups, adopting a modular architecture.

Improve performance: improving base chain EVM performance through consensus optimization and increased block sizes and gas limits.

Rollups and Modular Architecture

Vitalik introduced rollups as Ethereum's primary scaling solution in October 2020, aligning with modular blockchain principles. Therefore, Ethereum's scaling roadmap delegates execution to rollups, which are offchain virtual machines leveraging Ethereum's security. Rollups excel at execution with higher throughput, lower latency, and reduced transaction costs. Their iterative development cycles are shorter than Ethereum's - updates requiring years on Ethereum might take months on rollups due to lower potential costs and losses.

Rollups can operate with centralized sequencers while maintaining security escape hatches, helping them bypass certain decentralization requirements. It’s important to note that many rollups (including Arbitrum, Base and OP Mainnet) are still in stage 0 or stage 1. In a stage 1 rollup, fraud proof submission is restricted to whitelisted actors, and upgrades that aren't related to onchain provable bugs must provide users at least a 30-day window to exit. Stage 0 rollups provide less than 7 days for users to exit if the permissioned operator is down or censoring.

In Ethereum, a typical transaction size is 156 bytes, with the signatures containing the highest amount of data. Rollups enable the bundling of multiple transactions together, reducing the overall transaction size and optimizing gas costs. Simply put, rollups achieve efficiency by bundling multiple transactions into batches for Ethereum mainnet submission. This reduces onchain data processing but increases ecosystem complexity as new infrastructure requirements emerge for rollup connectivity. Additionally, rollups themselves have adopted modular architectures, moving execution to L3s to address base rollup throughput limitations, particularly for gaming applications.

While rollups theoretically eliminate bridging and liquidity fragmentation by becoming comprehensive chains atop Ethereum, current implementations have not yet become fully “comprehensive” chains. The three largest rollups by TVL (Arbitrum, OP Mainnet, and Base) maintain distinct ecosystems and user demographics. Each excels in its own niches, but none offers an all-encompassing solution.

Simply put, a user has to visit multiple chains to get the experience that a single monolithic chain such as Solana provides. A unified shared state (one of the core propositions of blockchains) is not present in the Ethereum ecosystem, which greatly limits onchain use cases: liquidity and state are fragmented, and competing rollups can’t easily know each other's state. State fragmentation also creates the need for bridges and cross-chain messaging protocols, which can link rollups and their states together but carry tradeoffs of their own. Monolithic blockchains do not face these fragmentation issues, as a single ledger records state.

Each rollup has taken a different approach to optimizing for its specific niche. Optimism introduced additional modularity through the Superchain, relying on other L2s building with its stack, and charging them fees, in order to compete. Arbitrum focuses primarily on DeFi, particularly perpetuals and options exchanges, while expanding into L3s with Xai and Sanko. Newer high-performance rollups like MegaETH and Base have emerged with increased throughput capabilities, aiming to offer a single, large chain. MegaETH has not yet launched, and Base has been impressive in its implementation but continues to lag in certain sectors, including derivatives trading (both options and perpetuals) and DePIN.

Early L2 Scaling

Optimism and Arbitrum

First-generation L2s provide improved execution compared to Ethereum but lag newer, hyperoptimized solutions. For example, Arbitrum processes 37.5 transactions per second (“TPS”), Optimism Mainnet 11 TPS. For comparison, Base has around 80 TPS, MegaETH targets 100,000 TPS, BNB Chain offers 65.1 TPS, and Monad targets 10,000 TPS.

While Arbitrum and Optimism Mainnet cannot support extremely high-throughput applications like fully onchain orderbooks, they scale through additional chain layers - L3s for Arbitrum and superchains for Optimism - as well as centralized sequencers.

Arbitrum's gaming-focused L3s, Xai and Proof of Play, demonstrate this approach. Built on the Arbitrum Orbit stack, they settle on Arbitrum using AnyTrust data availability. Xai achieves 67.5 TPS, while Proof of Play Apex reaches 12.2 TPS and Proof of Play Boss 10 TPS. These L3s introduce additional trust assumptions through Arbitrum settlement rather than Ethereum Mainnet, while facing potential challenges with less decentralized data availability layers. Optimism's L2s - Base, Blast, and upcoming Unichain - maintain stronger security through Ethereum settlement and blob data availability.

Both networks prioritize horizontal scaling. Optimism provides L2 infrastructure through OP Stack, chain deployment support, and shared bridging with interoperability features. Arbitrum offboards specific use cases to L3s, particularly gaming applications where additional trust assumptions pose lower capital risks than finance applications.

Improving Chain and EVM Performance

Alternative scaling approaches focus on execution optimization or targeted trade-offs to increase throughput and TPS through vertical rather than horizontal scaling. Base, MegaETH, Avalanche, and BNB Chain exemplify this strategy.

Base

Base announced plans to reach 1 Ggas/s through gradual gas target increases. In September, they raised the target to 11 Mgas/s and increased the gas limit to 33 Mgas. Initial blocks processed 258 transactions, maintaining approximately 70 TPS for five hours. By December 18th, the gas target reached 20 Mgas/s with 2-second block times, enabling 40M gas per block. In comparison, Arbitrum has 7 Mgas/s and OP Mainnet has 2.5 Mgas/s.

Base has emerged as a competitor to Solana and other high throughput chains. As of today, Base has surpassed other L2s in terms of activity, as seen by:

As of January 2025, their monthly fees reached $15.6 million—7.5 times higher than Arbitrum’s and 23 times higher than OP Mainnet’s.

As of January 2025, their transaction counts reached a cumulative 329.7M, 6 times higher than that of Arbitrum (57.9M) and 14 times higher than that of OP Mainnet (24.5M). Note: transaction counts can be gamed and can be misleading.

The Base team has focused on delivering a more unified experience by optimizing for speed, throughput and low fees, as opposed to Arbitrum and Optimism, which are focusing on a more modular approach. Users have shown that they prefer a more unified experience, as seen by the activity and revenue numbers of Base. In addition, Coinbase’s backing and distribution have been helpful.

MegaETH

MegaETH is an EVM-compatible L2. At its core, it processes transactions through a hybrid architecture that uses specialized sequencer nodes for execution. MegaETH is unique in its architectural separation of performance and security tasks, combined with a new state management system that replaces the traditional Merkle Patricia Trie to minimize disk I/O operations.

The system targets 100,000 transactions per second with sub-millisecond latency, while maintaining full EVM compatibility and the ability to handle terabyte-scale state data. MegaETH distributes functionality across three specialized node types using EigenDA for data availability:

The Sequencer: A high-performance single node (100 cores, 1-4TB RAM) manages transaction ordering and execution, maintaining state in RAM for quick access. It generates blocks at approximately 10ms intervals, witnesses for block validation, and state diffs tracking blockchain state changes. The sequencer achieves high performance through parallel EVM execution with priority support, operating without consensus overhead during normal operation.

The Provers: These lightweight nodes (1 core, 0.5GB RAM) compute cryptographic proofs validating block contents. They validate blocks asynchronously and out-of-order, employ stateless validation, scale horizontally, and generate proofs for full node verification. The system supports both zero-knowledge and fraud proofs.

The Full Nodes: Operating on moderate hardware (4-8 cores, 16GB RAM), full nodes bridge provers, sequencers, and EigenDA. They process compressed state diffs through peer-to-peer networking, apply diffs without transaction re-execution, verify blocks using prover-generated proofs, maintain state root with optimized Merkle Patricia Trie, and support 19x compression for synchronization.

Issues with Rollups

Monad fundamentally differs from rollups and their inherent tradeoffs. Most rollups today rely on centralized single sequencers, though efforts are underway to develop shared and decentralized sequencing solutions. This centralization of sequencers and proposers introduces operational vulnerabilities. Control by a single entity can lead to liveness issues and reduced censorship resistance. While escape hatches exist, centralized sequencers retain the ability to manipulate transaction speed or order to extract MEV. They also create single points of failure: if a sequencer fails, the entire L2 network can't function properly.

Apart from centralization risks, rollups come with additional trust assumptions and trade offs, particularly revolving around interoperability:

Users encounter multiple non-interchangeable forms of identical assets. The three largest rollups (Arbitrum, Optimism, and Base) maintain distinct ecosystems, use cases, and demographics. Users must bridge between rollups for specific applications, or protocols must launch across multiple rollups, bootstrapping liquidity and users on each while managing increased complexity and security risks from bridge integrations.

Additional interoperability issues arise from technical constraints (limited transactions per second on base L2 layers), which has led to further modularity and pushing execution to L3s, particularly for gaming. Centralization presents additional challenges.

We’ve seen optimized rollups (such as Base and MegaETH) enhance performance and optimize the EVM through a centralized sequencer, as transactions are ordered and executed without consensus requirements. This allows reduced block times and increased block sizes by utilizing a single high-capacity machine, while also creating single points of failure and potential censorship vectors.

Monad takes a distinct approach, requiring more powerful hardware than Ethereum Mainnet. While Ethereum L1 validators need 2 core CPUs, 4-8 GB memory, and 25 Mbps bandwidth, Monad requires 16 core CPUs, 32 GB memory, 2 TB SSDs, and 100 Mbps bandwidth. Monad's specifications appear substantial next to Ethereum's, but Ethereum has kept node requirements minimal to accommodate solo validators, and Monad's recommended hardware is readily accessible today.

Beyond hardware specifications, Monad is redesigning its software stack to achieve greater decentralization through node distribution than L2s offer. While L2s have prioritized enhanced hardware for single sequencers at the expense of decentralization, Monad pairs increased hardware requirements with software stack modifications to boost performance while maintaining node distribution.

Monad’s EVM

Early Ethereum forks primarily modified consensus mechanisms, as seen with Avalanche, while maintaining the Go Ethereum client for execution. Though multiple Ethereum clients exist in various programming languages, they essentially replicate the original design. Monad differs by rebuilding both the consensus and execution components from the ground up.

Monad prioritizes maximizing hardware utilization. In contrast, Ethereum Mainnet’s emphasis on supporting solo stakers has constrained performance optimization by requiring compatibility with less robust hardware. This limitation affects block sizes, throughput, and block time improvements—ultimately, a network is only as fast as its slowest validator.

Similar to Solana's approach, Monad employs more robust hardware to increase bandwidth and reduce latency. This strategy leverages all available cores, memory, and solid-state drives to enhance speed. Given the decreasing costs of powerful hardware, optimizing for high-performance equipment proves more practical than restricting capabilities for lower-quality devices.

The current Geth client processes execution sequentially through single-threading. Blocks contain linearly ordered transactions that transform the previous state into a new state. This state encompasses all accounts, smart contracts, and stored data. State changes occur when transactions are processed and validated, affecting account balances, smart contracts, token ownership, and other data.

Transactions often operate independently. The blockchain state comprises different accounts, each making transactions independently, which typically do not interact with one another. Based on this idea, Monad uses optimistic parallel execution.

Optimistic parallel execution attempts to run transactions in parallel to gain potential performance benefits - while initially assuming that there will be no conflicts. Multiple transactions are run simultaneously without initially worrying about their potential conflicts or dependencies. After execution, the system checks if the parallel transactions actually conflicted with each other, and corrects them if there are conflicts.

Protocol Mechanics

Parallel Execution

Solana’s Parallel Execution

When users think of parallel execution, they typically think of Solana and the SVM, which allows for parallel execution of transactions through access lists. Transactions on Solana include a header, account keys (addresses for instructions included in the transaction), blockhash (the hash included in the transaction when it was created), instructions, and an array of signatures for all accounts required as signers based on the transaction instructions.

Instructions per transaction include the program address, which specifies the program being invoked; accounts which list all accounts the instructions read from and write to; and instruction data, which specifies the instruction handler (functions that process instructions) as well as additional data necessary for the instruction handler.

Each instruction specifies three key details for every account involved:

The public address of the account

Whether the account needs to sign the transaction

Whether the instruction will modify the account's data

Solana uses these specified account lists to identify transaction conflicts in advance. Since all accounts are specified in the instruction, including details on whether they are writable, transactions can be processed in parallel if they do not include any accounts that write to the same state.

The process looks like this:

Solana examines the account lists provided in each transaction

It identifies which accounts will be written to

It checks for conflicts between transactions (whether they write to the same accounts)

Transactions that don't write to any of the same accounts are processed in parallel, while transactions that have conflicting write operations are processed sequentially
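The steps above can be sketched in a few lines of Python. This is a toy model of the idea only, not Solana's actual scheduler: the `Tx` class, the `schedule` function, and the account names are all hypothetical, and the conflict rule is limited to the write-versus-write case described in the text (overlaps between a reader and a writer are ignored here).

```python
from dataclasses import dataclass, field


@dataclass
class Tx:
    name: str
    writable: set = field(default_factory=set)  # accounts declared as writable
    readonly: set = field(default_factory=set)  # accounts declared as read-only


def conflicts(a: Tx, b: Tx) -> bool:
    # Per the description above: two transactions conflict when both
    # declare a write to the same account.
    return bool(a.writable & b.writable)


def schedule(txs):
    """Pack transactions into ordered batches; each batch can run in parallel."""
    batches = []
    for tx in txs:
        # Place tx after the last batch holding a conflicting transaction,
        # so conflicting transactions keep their original relative order.
        target = 0
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                target = i + 1
        if target == len(batches):
            batches.append([])
        batches[target].append(tx)
    return batches


txs = [
    Tx("t1", writable={"alice", "bob"}),
    Tx("t2", writable={"carol", "dave"}),
    Tx("t3", writable={"alice", "erin"}),  # also writes alice, so it conflicts with t1
]
for i, batch in enumerate(schedule(txs)):
    print(i, [tx.name for tx in batch])
```

Here `t1` and `t2` touch disjoint accounts and share a batch, while `t3` is pushed to a later batch because it writes to an account that `t1` also writes to. The key point is that all of this is decided from the declared account lists before anything executes.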

A similar mechanism exists in the EVM but is rarely used, since Ethereum does not require access lists. Including an access list makes the transaction larger and more expensive upfront, although users receive a discount on the state accesses they declare in advance.
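To make the "pay upfront, get a discount" tradeoff concrete, here is a rough arithmetic sketch using the gas constants from EIP-2929 and EIP-2930. The helper names and the placeholder address and storage keys are hypothetical, and the comparison assumes that every listed entry would otherwise have been a cold access, which is not always the case.

```python
# Gas constants as defined in EIP-2929 and EIP-2930.
ACCESS_LIST_ADDRESS_COST = 2400      # charged upfront per listed address
ACCESS_LIST_STORAGE_KEY_COST = 1900  # charged upfront per listed storage key
COLD_ACCOUNT_ACCESS_COST = 2600      # first touch of an undeclared address
COLD_SLOAD_COST = 2100               # first read of an undeclared storage slot
WARM_STORAGE_READ_COST = 100         # touch of an already-warm address or slot


def upfront_cost(access_list):
    """Gas added to the transaction for carrying the access list."""
    return sum(
        ACCESS_LIST_ADDRESS_COST + ACCESS_LIST_STORAGE_KEY_COST * len(entry["storageKeys"])
        for entry in access_list
    )


def cost_without_list(access_list):
    """Gas for the first touch of each entry if nothing was declared (all cold)."""
    return sum(
        COLD_ACCOUNT_ACCESS_COST + COLD_SLOAD_COST * len(entry["storageKeys"])
        for entry in access_list
    )


def cost_with_list(access_list):
    """Upfront charge plus warm-priced first touches of each declared entry."""
    touches = sum(1 + len(entry["storageKeys"]) for entry in access_list)
    return upfront_cost(access_list) + WARM_STORAGE_READ_COST * touches


# Hypothetical contract address and storage slots, for illustration only.
access_list = [
    {
        "address": "0x" + "11" * 20,
        "storageKeys": ["0x" + "00" * 31 + "01", "0x" + "00" * 31 + "02"],
    }
]
print(upfront_cost(access_list), cost_without_list(access_list), cost_with_list(access_list))
```

Under these assumptions the saving is small (100 gas per declared entry), and entries that are never touched, or that are already warm, are pure overhead. That helps explain why access lists remain optional and lightly used on Ethereum.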

Monad’s Parallel Execution

In contrast to Solana, Monad uses optimistic parallel execution. Instead of identifying which transactions affect which accounts and parallelizing based on that (Solana's approach), Monad assumes that transactions can be executed in parallel without interfering with each other.

When Monad runs transactions in parallel it assumes that the transactions start from the same point. When multiple transactions are run in parallel, the chain generates pending results for each of those transactions. In this case, pending result refers to bookkeeping done by the chain to track inputs to transactions and outputs from transactions, and how they affect the state. These pending results are committed in the original order of the transactions (i.e. based on priority fees).

To commit pending results, their inputs are checked to ensure that they are still valid. If a pending result's inputs have been modified (i.e. the transactions cannot run in parallel because they access the same accounts and would affect each other), the later transaction is re-executed, so the conflicting transactions are effectively processed sequentially. Re-execution only happens to preserve correctness. The cost is additional computation rather than transactions taking longer.

In the first iteration of execution, Monad has already checked for conflicts or dependencies on other transactions. Therefore, when the transaction executes the second time (after the first optimistic parallel execution fails), all previous transactions in the block have already been executed, ensuring the second attempt succeeds. Even if all transactions in Monad are dependent on each other, they are simply executed sequentially, producing the same outcome as another, non-parallelized EVM.

Monad tracks each transaction's read set and write set during execution and then merges results in the original transaction order. If a transaction that ran in parallel used data that turned out to be stale (because an earlier transaction updated something it read), Monad detects this when merging and re-executes that transaction using the correct updated state. This ensures the final outcome is identical to a sequential execution of the block, preserving Ethereum-compatible semantics. The overhead of such re-execution is minimal: expensive steps like signature verification or data loading need not be repeated from scratch, and often the required state is already cached in memory from the first run.

Example:

In the initial state, user A has 100 USDC, user B has 0 USDC and user C has 300 USDC.

There are two transactions:

Transaction 1: User A sends User B 10 USDC

Transaction 2: User A sends User C 10 USDC

Serial Execution Process

With serial execution, the process is simpler, but less efficient. Each transaction is executed serially:

User A first sends User B 10 USDC.

Afterwards User A sends User C 10 USDC.

At end state, for serial execution (non-Monad):

User A has 80 USDC left (sent 10 USDC to B and C each).

User B has 10 USDC.

User C has 310 USDC.

Parallel Execution Process

With parallel execution, the process is more complex, but more efficient. Multiple transactions process simultaneously rather than waiting for each to complete in sequence. While transactions run in parallel, the system tracks their inputs and outputs. During a sequential "merge" phase, if it detects that a transaction used an input that was changed by an earlier transaction, that transaction is re-executed with the updated state.

Step-by-step process:

User A starts with 100 USDC, User B starts with 0 USDC, and User C starts with 300 USDC.

With optimistic parallel execution, multiple transactions run simultaneously, while initially assuming that they're all working from the same starting state.

In this case, Transaction 1 and Transaction 2 execute in parallel. Both transactions read the initial state where User A has 100 USDC.

Transaction 1 plans to send 10 USDC from User A to User B, reducing User A's balance to 90 and increasing User B's to 10.

At the same time, Transaction 2 also reads User A's initial balance of 100 and plans to transfer 10 USDC to User C, attempting to reduce User A's balance to 90 and increase User C's to 310.

When the chain validates these transactions sequentially, Transaction 1 is checked first. Since its input values match the initial state, it's committed, and User A's balance becomes 90 while User B receives 10 USDC.

When the chain checks Transaction 2, it discovers an issue: Transaction 2 was planned assuming User A had 100 USDC, but User A now only has 90 USDC. Due to this mismatch, Transaction 2 must be re-executed.

During re-execution, Transaction 2 reads the updated state where User A has 90 USDC. It then successfully transfers 10 USDC from User A to User C, leaving User A with 80 USDC and increasing User C's balance to 310 USDC.

In this case, both transactions were able to complete successfully since User A had sufficient funds for both transfers.

At end state, for parallel execution (Monad):

The outcome is the same:

User A has 80 USDC left (sent 10 USDC to B and C each).

User B has 10 USDC.

User C has 310 USDC.
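The walkthrough above can be reproduced with a small Python sketch. It is a simplified model under stated assumptions, not Monad's implementation: `TrackedState`, `transfer`, and `run_block` are hypothetical names, balances live in a plain dict, and the "parallel" phase is an ordinary loop in which every transaction sees the same starting snapshot.

```python
class TrackedState:
    """View over a base state that records what a transaction reads and writes."""

    def __init__(self, base):
        self.base = base
        self.reads = {}   # key -> value observed in the base state
        self.writes = {}  # key -> value the transaction wants to store

    def get(self, key):
        if key in self.writes:
            return self.writes[key]
        value = self.base[key]
        self.reads.setdefault(key, value)
        return value

    def set(self, key, value):
        self.writes[key] = value


def transfer(sender, recipient, amount):
    def run(state):
        balance = state.get(sender)
        if balance < amount:
            return  # insufficient funds: the transfer has no effect
        state.set(sender, balance - amount)
        state.set(recipient, state.get(recipient) + amount)
    return run


def execute(tx, base):
    view = TrackedState(base)
    tx(view)
    return view


def run_block(state, txs):
    # Phase 1 (optimistic): run every transaction against the same snapshot.
    snapshot = dict(state)
    pending = [execute(tx, snapshot) for tx in txs]

    # Phase 2 (merge): commit in the original order, re-executing any
    # transaction whose recorded reads no longer match the committed state.
    reexecuted = []
    for index, (tx, result) in enumerate(zip(txs, pending)):
        if any(state[key] != seen for key, seen in result.reads.items()):
            result = execute(tx, state)
            reexecuted.append(index)
        state.update(result.writes)
    return state, reexecuted


balances = {"A": 100, "B": 0, "C": 300}
block = [transfer("A", "B", 10), transfer("A", "C", 10)]
final_state, reexecuted = run_block(balances, block)
print(final_state, reexecuted)
```

Transaction 2 is the only one re-executed, because its recorded read of A's balance (100) no longer matches the committed value (90). The final balances are the same as in the serial case.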

Deferred Execution

When blockchains verify and agree on transactions, nodes across the globe must communicate with each other. This global communication encounters physical limitations, as data requires time to travel between distant points like Tokyo and New York.

Most blockchains use a sequential approach where execution is tightly coupled with consensus. In these systems, execution is a prerequisite for consensus - nodes must execute transactions before finalizing blocks.

Here’s a simplified breakdown:

Execution must complete before a block can be finalized and the next block can begin. Nodes reaching consensus first agree on transaction ordering, then on the merkle root summarizing the state after executing those transactions. Leaders must execute all transactions in a proposed block before sharing it, and validating nodes must execute each transaction before reaching consensus. This constrains the time available for execution: it occurs twice, and the block time must still leave sufficient room for multiple rounds of global communication for consensus. For example, Ethereum has 12-second block times, while the actual execution may take only around 100 milliseconds (actual execution time varies significantly based on block complexity and gas usage).

Some systems attempt to optimize this through interleaved execution, which divides tasks into smaller segments that alternate between processes. While processing still occurs sequentially with single-task execution at any moment, rapid switching creates apparent simultaneity. However, this approach still fundamentally limits throughput as execution and consensus remain interdependent.

Diagram from Martin Thoma

Monad addresses the limitations of sequential and interleaved execution by decoupling execution from consensus. Nodes achieve consensus on transaction ordering without executing them - i.e. two parallel processes occur:

Nodes execute transactions that reached consensus

Consensus proceeds for the next block, without waiting for execution to complete, with execution following consensus

This structure enables the system to commit to substantial work through consensus before execution begins, allowing Monad to process larger blocks with more transactions by allocating additional time for execution. Additionally, it enables each process to use the full block time independently - consensus can use the entire block time for global communication, execution can use the entire block time for computation, and neither process blocks the other.
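A rough way to see the difference is to compare the execution time budget in each design. The sketch below is illustrative only: the function names are hypothetical, the 400ms consensus figure is an invented number chosen for the example, and the coupled model simply splits the leftover time across the two executions (leader and validators) mentioned earlier.

```python
def coupled_execution_budget(block_time_ms, consensus_ms, executions_per_block=2):
    """Coupled design: execution shares the block time with consensus
    and sits on the critical path more than once."""
    return (block_time_ms - consensus_ms) / executions_per_block


def pipelined_schedule(n_blocks, block_time_ms):
    """Decoupled design: block k is agreed on in slot k and executed in slot k + 1,
    while consensus on block k + 1 runs at the same time."""
    rows = []
    for k in range(n_blocks):
        consensus = (k * block_time_ms, (k + 1) * block_time_ms)
        execution = ((k + 1) * block_time_ms, (k + 2) * block_time_ms)
        rows.append((k, consensus, execution))
    return rows


BLOCK_TIME_MS = 500  # block frequency quoted in the introduction
CONSENSUS_MS = 400   # assumed figure, for illustration only

print("coupled budget (ms):", coupled_execution_budget(BLOCK_TIME_MS, CONSENSUS_MS))
for block, consensus, execution in pipelined_schedule(3, BLOCK_TIME_MS):
    print(f"block {block}: consensus {consensus}, execution {execution}")
```

In the pipelined schedule, the execution window of block k coincides with the consensus window of block k + 1, so each process gets the full block time instead of a slice of it.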

To maintain security and state consistency while decoupling execution from consensus, Monad uses delayed merkle roots: each block includes a state merkle root from N blocks ago (N is expected to be 10 at launch; in the current testnet it is set to 3), allowing nodes to verify they reached the same state after execution. The delayed merkle root acts as a checkpoint: after N blocks, nodes must prove they arrived at the same state root, otherwise they have executed something incorrectly. If a node's execution produces a different state root, it will detect this N blocks later and can roll back and re-execute to rejoin consensus. This helps eliminate risks of malicious behavior from nodes. Light clients can also use the delayed merkle roots to verify state, albeit at an N-block delay.
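As a minimal sketch of the checkpoint idea, assume each node keeps the state roots it computed locally and compares them against the delayed root carried by later blocks. Everything here is hypothetical scaffolding: `state_root` is a stand-in that hashes a sorted dict rather than building a real merkle trie, and `check_delayed_root` is an invented helper.

```python
import hashlib

DELAY = 3  # N; the text cites 3 on the current testnet


def state_root(state):
    """Stand-in for a merkle root: a hash over the sorted key/value pairs."""
    encoded = repr(sorted(state.items())).encode()
    return hashlib.sha256(encoded).hexdigest()


def check_delayed_root(block_number, delayed_root, local_roots):
    """Compare the root carried by `block_number` with the root this node
    computed for block `block_number - DELAY`.

    Returns True or False, or None if the node has not executed that block yet.
    """
    target = block_number - DELAY
    if target not in local_roots:
        return None
    return local_roots[target] == delayed_root


# This node executed block 7 and recorded the resulting root.
local_roots = {7: state_root({"A": 80, "B": 10, "C": 310})}

# Block 10 carries the network's agreed root for block 7.
agreed = state_root({"A": 80, "B": 10, "C": 310})
print(check_delayed_root(10, agreed, local_roots))
```

A `False` result is the signal described above: the node's execution diverged somewhere at or before block 7, and it would need to roll back and re-execute.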

Since execution is deferred and happens after consensus, a malicious actor (or an ordinary user, accidentally) could keep submitting transactions that will eventually fail due to insufficient funds. For example, a user with a total balance of 10 MON could submit 5 transactions that each try to send 10 MON. Without any checks, these transactions could all get through consensus only to fail during execution. To address this and reduce potential spamming, nodes implement a safeguard during consensus by tracking in-flight transactions.

For each account, nodes examine the account's balance from N blocks ago (as that's the latest verified, correct state). Then, for each pending transaction from that account that's "in flight" (approved by consensus but not yet executed), they subtract both the value being transferred (such as if sending 1 MON) and the maximum possible gas cost, calculated as gas_limit multiplied by maxFeePerGas.

This process creates a running "available balance" that's used to validate new transactions during consensus. If a new transaction's value plus maximum gas cost would exceed this available balance, it's rejected during consensus rather than letting it through only to fail during execution.

Because Monad's consensus proceeds with a slightly delayed view of the state (due to decoupling execution), it implements a safeguard to prevent including transactions that the sender cannot ultimately pay for. In Monad, each account has an available or "reserve" balance tracked during consensus. As transactions are added to a proposed block, the protocol deducts from this available balance the transaction's maximum possible cost (gas * max fee + value transferred). If an account's available balance would drop below zero, further transactions from that account are not included in the block.

This mechanism (sometimes described as charging a carriage cost to a reserve balance) ensures that only transactions that can be paid for are proposed, thereby defending against a DoS attack where an attacker with 0 funds tries to flood the network with unusable transactions. Once the block is finalized and executed, the balances are reconciled accordingly, but during the consensus phase Monad nodes always have an up-to-date check on spendable balance for pending transactions.
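The available-balance check can be sketched as follows. This is an illustration of the accounting described above rather than Monad's code: the `Tx` class and `select_for_proposal` are hypothetical, amounts are integers in an assumed 18-decimal base unit, and the example uses 4 MON transfers (rather than the 10 MON transfers in the text) so that some transactions pass the check.

```python
from dataclasses import dataclass

MON = 10**18  # assumed 18-decimal base unit, as with ETH and wei


@dataclass
class Tx:
    sender: str
    value: int
    gas_limit: int
    max_fee_per_gas: int

    def max_cost(self):
        # Worst case the sender could be charged: transferred value plus full gas.
        return self.value + self.gas_limit * self.max_fee_per_gas


def select_for_proposal(delayed_balances, in_flight, candidates):
    """Split candidates into (accepted, rejected) using the running available balance."""
    # Start from the last verified balances (state from N blocks ago).
    available = dict(delayed_balances)

    # Reserve funds for transactions already agreed on but not yet executed.
    for tx in in_flight:
        available[tx.sender] = available.get(tx.sender, 0) - tx.max_cost()

    accepted, rejected = [], []
    for tx in candidates:
        remaining = available.get(tx.sender, 0)
        if tx.max_cost() <= remaining:
            available[tx.sender] = remaining - tx.max_cost()
            accepted.append(tx)
        else:
            rejected.append(tx)
    return accepted, rejected


balances = {"alice": 10 * MON}
candidates = [Tx("alice", 4 * MON, 21_000, 50 * 10**9) for _ in range(5)]
accepted, rejected = select_for_proposal(balances, [], candidates)
print(len(accepted), len(rejected))
```

With a verified balance of 10 MON, only the first two 4 MON transfers fit once their maximum gas cost is reserved. The remaining three are rejected during consensus instead of failing later during execution.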

MonadBFT

Consensus

HotStuff

MonadBFT is a low-latency, high-throughput Byzantine Fault Tolerant ("BFT") consensus mechanism derived from HotStuff consensus.

HotStuff was created by VMware Research and further enhanced by LibraBFT from Meta’s former blockchain team. It achieved both linear view change and responsiveness, meaning it can efficiently rotate leaders while progressing at actual network speed rather than predetermined timeouts. HotStuff also uses threshold signatures for efficiency, and implements pipelined operation allowing new blocks to be proposed before previous ones are committed.

However, these benefits come with certain tradeoffs: an additional round compared to classic two-round BFT protocols leads to higher latency and the possibility of forks during pipelining. Despite these tradeoffs, HotStuff's design enables better scaling, making it particularly suitable for large-scale blockchain implementations, even though it results in slower finality than two-round BFT protocols.

Below is a simplified breakdown of HotStuff:

When transactions occur they are sent to one of the network’s validators, known as the leader.

The leader compiles these transactions into a block and broadcasts it to the other validators in the network.

The validators then verify the block by casting their votes, which are sent to the leader of the next block.

To safeguard against malicious actors or communication failures, the block must undergo multiple rounds of voting before the state is finalized.

Depending on the specific implementation, the block is only committed after successfully passing through two or three consecutive rounds, ensuring consensus is robust and secure.

Although MonadBFT is derived from HotStuff, it introduces unique modifications and new concepts that can be explored further.

Transaction Agreement

MonadBFT is specifically designed to achieve transaction agreement under partially synchronous conditions - meaning that the chain can come to consensus even during asynchronous periods when messages are delayed unpredictably.

Eventually, the network stabilizes and delivers messages (within a known time bound). These periods of asynchronicity arise from Monad's architecture, as the chain has to implement certain mechanisms for increased speed, throughput and parallelized execution.

2-Round System

In contrast to HotStuff, which initially implemented a 3-round system, MonadBFT uses a 2-round system similar to protocols like Jolteon, DiemBFT, and Fast HotStuff.

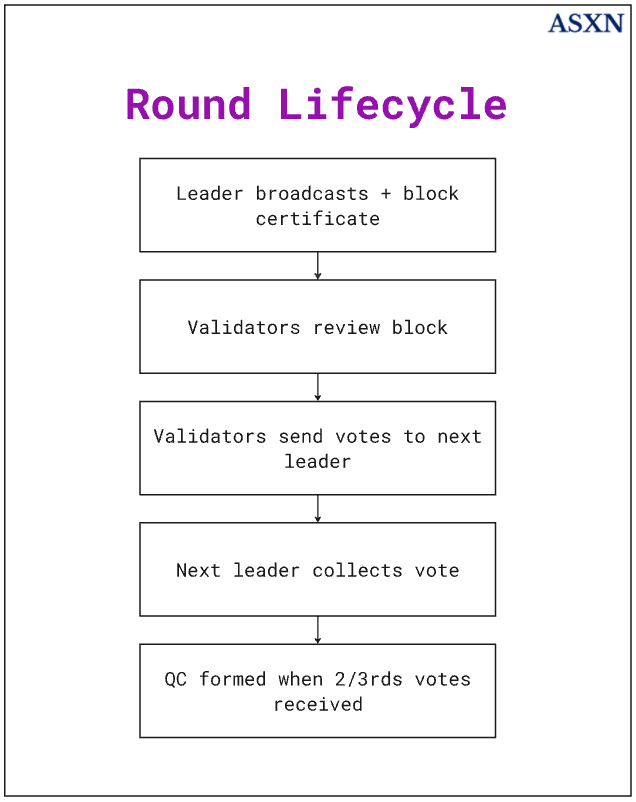

A round consists of the following basic steps:

1. In each round, the leader broadcasts a new block and a certificate (quorum certificate or timeout certificate) from the previous round.

2. Each validator reviews the block and sends a signed vote to the next round's leader.

3. When enough votes are collected (2/3rds), a quorum certificate is formed. A quorum certificate (“QC”) signals that the network’s validators have reached consensus to append the block, while a timeout certificate (“TC”) indicates that the consensus round failed and needs to be restarted.

The "2-round" specifically refers to the commitment rule. To commit a block in a 2-round system:

Round 1: Initial block is proposed and gets a QC

Round 2: Next block is proposed and gets a QC

If these two rounds complete consecutively, the first block can be committed.

DiemBFT originally used a 3-round system but later upgraded to a 2-round system. The 2-round system achieves faster commitment by requiring fewer rounds of communication, which lowers latency because transactions do not need to wait for an additional confirmation round.

Step-By-Step Process

The consensus process in MonadBFT works as follows:

Leader Operation & Block Proposal: The process begins when a designated leader for the current round initiates consensus. The leader creates and broadcasts a new block containing user transactions, along with proof of the previous round's consensus in the form of either a QC or TC. This creates a pipelined structure where each block proposal carries forward the certification of previous blocks.

Validator Actions: Once validators receive the leader's block proposal, they begin their verification process. Each validator carefully reviews the block for validity according to protocol rules. Valid blocks receive a signed YES vote sent to the next round's leader. However, if a validator doesn't receive a valid block within the expected timeframe, they initiate the timeout procedure by broadcasting a signed timeout message including their highest known QC. This dual-path approach ensures the protocol can make progress even when block proposals fail.

Certificate Creation: The protocol uses two types of certificates to track consensus progress. A QC is created when the leader collects YES votes from two-thirds of validators, proving widespread agreement on a block. Alternatively, if two-thirds of validators time out without receiving a valid proposal, their timeout messages form a TC, which allows the protocol to safely advance to the next round. Both certificate types serve as critical proof of validator participation.

Block Finalization (2-chain commit rule): MonadBFT uses a two-chain commit rule for block finalization. When a validator observes two adjacent certified blocks from consecutive rounds forming a chain B ← QC ← B' ← QC', they can safely commit block B and all its ancestors. This two-chain approach provides both safety and liveness while maintaining performance.
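The two-chain rule can be sketched as follows. `Block` and `committed_by` are hypothetical names; signature and QC verification are omitted, and the sketch assumes that a certified B' implies its parent B already carries a QC.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Block:
    round: int
    parent: Optional["Block"] = None  # None marks the genesis block


def committed_by(certified):
    """Return the blocks that become committed once `certified` (B') obtains a QC."""
    parent = certified.parent
    # Two-chain rule: B and B' must come from consecutive rounds.
    if parent is None or certified.round != parent.round + 1:
        return []
    chain = []
    block = parent
    while block is not None:  # B and all of its ancestors
        chain.append(block)
        block = block.parent
    return chain
```

If a round in between timed out (for example, B' is in round 4 while B is in round 2), the function returns an empty list and B has to wait for a later pair of consecutive certified blocks.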

Local Mempool Architecture

Monad adopts a local mempool architecture instead of a traditional global mempool. In most blockchains, pending transactions are gossiped to all nodes, which can be slow (many network hops) and bandwidth-heavy due to redundant transmissions. By contrast, in Monad each validator maintains its own mempool; transactions are forwarded by RPC nodes directly to the next few scheduled leaders (currently the next N = 3 leaders) for inclusion.

This leverages the known leader schedule (avoiding unnecessary gossip to non-leaders) and ensures new transactions reach block proposers quickly. Upcoming leaders perform validation checks and add the transactions to their local mempools, so when a validator’s turn to lead arrives, it already has relevant transactions queued. This design reduces propagation latency and saves bandwidth, enabling higher throughput.
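As a rough illustration of the forwarding step, the sketch below sends a transaction to the next N scheduled leaders. It assumes a simple round-robin schedule for readability (the real schedule may be derived differently), and `next_leaders` and `forward_transaction` are hypothetical helper names.

```python
def next_leaders(schedule, current_round, n=3):
    """Leaders for the n rounds that follow current_round (round-robin assumption)."""
    return [schedule[(current_round + i) % len(schedule)] for i in range(1, n + 1)]


def forward_transaction(tx, schedule, current_round, mempools, n=3):
    """Place tx in the local mempool of each upcoming leader and return the targets."""
    targets = next_leaders(schedule, current_round, n)
    for leader in targets:
        # Each validator keeps its own mempool; non-leaders never see the tx.
        mempools.setdefault(leader, []).append(tx)
    return targets
```

With five validators and the current round at 3, the transaction reaches `v4`, `v0`, and `v1` only, rather than being gossiped to every node.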

RaptorCast

Monad uses a specialized multicast protocol called RaptorCast to rapidly propagate blocks from the leader to all validators. Instead of sending the full block serially to each peer or relying on simple gossip, the leader breaks the block proposal data into encoded chunks using an erasure-coding scheme (per RFC 5053) and distributes these chunks efficiently through a two-level tree of relays. In practice, the leader sends different chunks to a set of first-tier validator nodes, who then forward chunks to others, such that every validator eventually receives enough chunks to reconstruct the full block. The assignment of chunks is weighted by stake (each validator is responsible for forwarding a portion of chunks) to ensure load is balanced. This way, the entire network's upload capacity is utilized to disseminate blocks quickly, minimizing latency while still tolerating Byzantine (malicious or faulty) nodes who might drop messages. RaptorCast allows Monad to achieve fast, reliable block broadcasting even with large blocks, which is critical for high throughput.
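The stake-weighted assignment of chunks can be illustrated with a small sketch. This is not RaptorCast itself (the erasure coding and relay tree are omitted); it only shows one plausible way to split a fixed number of chunks in proportion to stake, using a largest-remainder allocation. The function name `assign_chunks` and the allocation method are assumptions.

```python
def assign_chunks(stakes, total_chunks):
    """Split total_chunks across validators in proportion to their stake."""
    total_stake = sum(stakes.values())
    # Integer floor share per validator, plus the remainder used to break ties.
    counts = {v: total_chunks * s // total_stake for v, s in stakes.items()}
    remainders = {v: total_chunks * s % total_stake for v, s in stakes.items()}
    leftover = total_chunks - sum(counts.values())
    # Hand the leftover chunks to the validators with the largest remainders.
    for v in sorted(stakes, key=lambda v: remainders[v], reverse=True)[:leftover]:
        counts[v] += 1
    return counts
```

Every chunk is assigned exactly once, so the forwarding load tracks each validator's share of total stake.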

BLS and ECDSA Signatures

Quorum certificates and timeout certificates are implemented using BLS and ECDSA signatures, which are two different types of digital signature schemes used in cryptography.

Monad uses a combination of BLS signatures and ECDSA signatures for security and scalability. BLS signatures enable signature aggregation, while ECDSA signatures are generally faster to verify.

ECDSA Signatures

Although ECDSA signatures cannot be aggregated, they are faster to verify. Monad uses them for QCs and TCs.

QC Creation:

1. Leader proposes a block

2. Validators vote by signing if they agree

3. When the required share of votes has been collected, the votes can be combined into a QC

4. QC proves validators agreed on that block

TC Creation:

1. A validator doesn't receive a valid block within a predetermined time

2. It broadcasts a signed timeout message to its peers

3. If enough timeout messages are collected, they form a TC

4. The TC allows the network to move to the next round even though the current round failed
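The two flows above can be sketched as a small collector that counts distinct signers and emits a certificate once two-thirds of validators have been heard from. Real signatures, stake weighting, and the highest-QC field carried in timeout messages are omitted, and `CertificateCollector` is a hypothetical name.

```python
class CertificateCollector:
    def __init__(self, validators):
        self.validators = set(validators)
        self.votes = {}     # (round, block_id) -> set of validators that voted YES
        self.timeouts = {}  # round -> set of validators that timed out

    def _has_quorum(self, signers):
        # At least two-thirds of validators, counted by head (an assumption).
        return 3 * len(signers) >= 2 * len(self.validators)

    def add_vote(self, validator, round_no, block_id):
        if validator not in self.validators:
            return None  # ignore messages from unknown signers
        signers = self.votes.setdefault((round_no, block_id), set())
        signers.add(validator)  # a set, so duplicate votes count once
        return ("QC", round_no, block_id) if self._has_quorum(signers) else None

    def add_timeout(self, validator, round_no):
        if validator not in self.validators:
            return None
        signers = self.timeouts.setdefault(round_no, set())
        signers.add(validator)
        return ("TC", round_no) if self._has_quorum(signers) else None
```

With four validators, the third distinct vote for the same block produces a QC, and the third distinct timeout for the same round produces a TC.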

BLS Signatures

Monad uses BLS signatures for multi-signatures, as it allows signatures to be incrementally aggregated into a single signature. This is used primarily for aggregatable message types, such as votes and timeouts.

Votes are messages sent by validators when they agree with a proposed block. They contain a signature indicating approval of the block, and are used to build QCs.

Timeouts are messages sent when a validator hasn't received a valid block within expected time. They contain a signed message with the current round number, the validator's highest QC and a signature on these values. They are used to build TCs.

Both votes and timeouts can be aggregated using BLS signatures to save space and improve efficiency. As mentioned, BLS signatures are comparatively slower to verify than ECDSA signatures.

Monad combines ECDSA and BLS to benefit from the strengths of both. Although the BLS scheme is slower, it allows signatures to be aggregated and is therefore particularly useful for votes and timeouts, while ECDSA is faster but does not allow aggregation.

Monad MEV

We highly recommend reading any resources published by or involving Thogard to learn more about MEV on Monad. We also recommend aPriori’s two blog posts, MEV Landscape in the Parallel Execution Era and Unlocking Monad’s Potential.

As a short background, MEV refers to value which can be extracted by parties through reordering, including or excluding transactions within a block. MEV is often classified as “good” MEV, i.e. MEV which keeps markets healthy and efficient, (e.g. liquidations, arbitrage) or “bad” MEV (e.g. sandwiching).

Monad's deferred execution affects the way that MEV works on the chain. On Ethereum, execution is a prerequisite to consensus - meaning that when nodes agree on a block they are agreeing on both the transaction list and ordering, as well as the resulting state. Before proposing a new block, leaders must execute all transactions and compute the final state, allowing searchers and block builders to reliably simulate transactions against the latest confirmed state.

In contrast, on Monad consensus and execution are decoupled. Nodes only need to agree on the transaction ordering for the most recent block, while consensus on the state may come later. This means that validators may be working with state data based on an earlier block, which makes it impossible for them to simulate against the latest block. In addition to complications arising from the lack of confirmed state information, Monad's 500ms block times could make it challenging for builders to simulate blocks in order to optimize them.

Access to the latest state data is necessary for searchers as it provides them with confirmed asset prices on DEXs, liquidity pool balances and smart contract states etc. - which enables them to identify potential arbitrage opportunities and spot liquidation events. If the latest state data is not confirmed, searchers cannot simulate against the block before the next one is produced and face the risk of transaction reverts before the state is confirmed.

Given that there exists a lag in Monad blocks, the MEV landscape may be similar to that of Solana.

For context, on Solana, blocks are produced every ~400ms in slots, but the time between a block being produced and becoming "rooted" (finalized) is longer - typically 2000-4000ms. This delay isn't from block production itself, but rather from the time needed to collect enough stake-weighted votes from validators for the block to become finalized.

During this voting period, the network continues to process new blocks in parallel. Since transaction fees are very low and new blocks can be processed in parallel it creates a "race condition" where searchers spam transactions hoping to get included - which leads to many transactions being reverted. For example, over the month of December, 1.3B out of 3.16B of non-vote transactions (approximately 41%) were reverted on Solana. Jito's Buffalu highlighted back in 2023 that "98% of arbitrage transactions are failing on Solana".

As a similar block lag effect exists on Monad, where the confirmed state information of the latest block doesn't exist, and new blocks are processed in parallel, searchers may be incentivized to spam transactions - which may fail as transactions are reverted and the confirmed state differs from those they used to simulate.

MonadDB

Monad chose to build a custom database, called MonadDB, for storing and accessing blockchain data. One of the common concerns with chain scalability is state growth, which refers to data size exceeding the capacity of nodes. Paradigm published a short research piece on state growth back in April, in which they highlighted the difference between state growth, history growth and state access. They argue that most people bundle all three under "state growth", despite these being distinct concepts that each affect node hardware performance.

As they outlined:

State growth refers to the accumulation of new accounts (account balances and nonces) and contracts (contract bytecode and storage). Nodes need to have sufficient storage size and memory size to be able to accommodate for state growth.

History growth refers to the accumulation of new blocks and new transactions. Nodes need to have sufficient bandwidth to share block data and they need to have enough storage to store the block data.

State access refers to the read and write operations used to build and validate blocks.

As previously mentioned, both state growth and history growth affect chain scalability since data size may exceed the capacity of nodes. Nodes need to store data in permanent storage to build, validate and distribute blocks. In addition, nodes must cache in memory to synchronise with the chain. Both state growth and history growth, along with optimized state access, need to be accommodated by the chain, as not doing so limits the block sizes and operations per block. The more data there is in a block and the more read and write operations per block, the larger the history growth and state growth become, and the greater the need for efficient state access.

Although state and history growth are important factors for scalability, they are not the primary concern, specifically from a disk performance perspective. MonadDB focuses on managing state growth through logarithmic database scaling: adding 16x more state only requires one additional disk access per state read. With regard to history growth, when a chain has high performance, there ends up being too much data to store locally. Other high-throughput chains, like Solana, have relied on cloud hosting like Google BigTable to store historical data, which works, but comes at the cost of decentralization due to reliance on centralized parties. Monad will implement a similar solution initially, while working towards a decentralized solution eventually.
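The "16x more state, one more disk access" claim follows from the depth of a 16-way trie growing with the base-16 logarithm of the number of keys. The sketch below assumes an idealized, balanced trie with a branching factor of 16 and one disk access per level; `trie_depth` is a hypothetical helper, not a MonadDB function.

```python
def trie_depth(num_keys, branching=16):
    """Smallest depth d such that branching**d >= num_keys."""
    depth = 0
    capacity = 1
    while capacity < num_keys:
        capacity *= branching
        depth += 1
    return depth


small_state = 16 ** 5            # roughly one million keys
large_state = 16 * small_state   # 16x more state
extra_accesses = trie_depth(large_state) - trie_depth(small_state)
```

Under these assumptions `extra_accesses` is 1: multiplying the state by 16 adds a single level to the trie.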

State Access

In addition to state growth and history growth, one of the key implementations of MonadDB is optimizing reading and writing operations per block (i.e. improved state access).

Ethereum uses the Merkle Patricia Trie ("MPT") to store state. The MPT borrows features from PATRICIA, a data retrieval algorithm, for more efficient retrieval of items.

Merkle Trees

A Merkle Tree ("MT") is a collection of hashes reduced to a single root hash, referred to as the Merkle root. A hash of data is a fixed-size cryptographic representation of the original data. The Merkle root is created by repeatedly hashing pairs of hashes until a single hash (the Merkle root) remains. The Merkle root is useful as it allows verification of the leaves (the individual hashes which were repeatedly hashed to create the root), without having to individually verify each leaf.

This is much more efficient than verifying each transaction individually, especially in large systems where there are many in each block. It creates a verifiable relationship between individual pieces of data, and allows for "Merkle proofs" where you can prove a transaction is included in a block by providing just the transaction and the intermediate hashes needed to reconstruct the root (log(n) hashes instead of n transactions).
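A compact sketch of building a Merkle root and checking a Merkle proof is shown below. It uses SHA-256 and duplicates the last hash on odd-sized levels; both are illustrative choices (Ethereum, for instance, uses Keccak-256 and a different tree layout). The function names are hypothetical and the list of leaves is assumed to be non-empty.

```python
import hashlib


def h(data):
    return hashlib.sha256(data).digest()


def merkle_levels(leaves):
    """Return every level of the tree, from leaf hashes up to the root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # pair a trailing node with itself
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_proof(levels, index):
    """Sibling hashes needed to rebuild the root from the leaf at `index`."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # trailing node on an odd level
        proof.append((level[sibling], index % 2 == 0))  # (hash, node_is_left)
        index //= 2
    return proof


def verify(leaf, proof, root):
    node = h(leaf)
    for sibling, node_is_left in proof:
        node = h(node + sibling) if node_is_left else h(sibling + node)
    return node == root
```

For five transactions the proof contains three hashes, in line with the log(n) bound mentioned above, and changing the transaction makes verification fail.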

Merkle Patricia Trie

Merkle Trees work well for Bitcoin's needs, where transactions are static and the primary requirement is proving that a transaction existed within a block. However, they are less ideal for Ethereum's use cases, which require retrieving and updating stored data (for example, account balances and nonces, adding new accounts, updating keys in storage), not just verifying its existence, which is why Ethereum uses the Merkle Patricia Trie to store state.

A Merkle Patricia Trie ("MPT") is a modified Merkle Tree which stores and verifies key-value pairs in a state database. While the MT takes a sequence of data (e.g. transactions) and just hashes them in pairs, MPT organizes data like a dictionary - each piece of data (value) has a specific address (key) where it lives. This key-value storage is achieved through a Patricia Trie.

Ethereum uses different types of keys to access different types of Tries depending on the data that needs to be retrieved. Ethereum uses 4 types of tries:

1. World State Trie: Contains the mapping between addresses and account states.

2. Account Storage Trie: Stores data associated with smart contracts.

3. Transaction Trie: Contains all transactions included in a block.

4. Receipt Trie: Stores transaction receipts with information about transaction execution.

Tries are accessed with different types of keys to access values which allows the chain to perform a variety of functions, including check balances, verify that contract code exists, or look up specific account data.

Note: Ethereum plans on moving away from MPT to Verkle trees, to “upgrade Ethereum nodes so that they can stop storing large amounts of state data without losing the ability to validate blocks”. You can read more about Verkle trees here.

MonadDB: Patricia Trie

Unlike Ethereum, MonadDB implements a Patricia Trie data structure natively, both on-disk and in-memory.

As discussed previously, the MPT combines two different data structures: the Patricia Trie is used to store, retrieve and update key-value pairs, while the Merkle Tree is used for verification. This leads to extra overhead, as hash-based node references add a layer of complexity and the Merkle structure requires additional storage for hashes at each node.

A Patricia Trie based data structure allows MonadDB to:

Simpler structure: Nodes store keys and values directly, without Merkle hashes at each node or hash-based references between nodes.

Direct path compression: Reducing number of lookups needed to reach data.

Native key-value storage: While MPT integrates Patricia Trie into a separate key-value storage system, Patricia Trie has key-value storage as its native functionality which allows for better optimization.

No data structure conversion: There is no need to translate between trie format and database format.

These allow MonadDB to have relatively lower computational overhead, require less storage space, achieve faster operations (for both retrieval and updating), and maintain a simpler implementation.
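Path compression is the property that lets a Patricia (radix) trie reach a key in fewer hops than a one-character-per-node trie. The sketch below is a generic in-memory radix trie written for illustration; it is not MonadDB's on-disk layout, and `RadixTrie`, `Node`, and the hop counter are hypothetical.

```python
def common_prefix_len(a, b):
    n = 0
    while n < len(a) and n < len(b) and a[n] == b[n]:
        n += 1
    return n


class Node:
    def __init__(self):
        self.children = {}  # first character of edge label -> (label, child)
        self.value = None


class RadixTrie:
    def __init__(self):
        self.root = Node()

    def insert(self, key, value):
        node = self.root
        while key:
            entry = node.children.get(key[0])
            if entry is None:
                leaf = Node()
                leaf.value = value
                node.children[key[0]] = (key, leaf)  # whole suffix on one edge
                return
            label, child = entry
            k = common_prefix_len(key, label)
            if k < len(label):
                # Split the edge at the point where the keys diverge.
                mid = Node()
                mid.children[label[k]] = (label[k:], child)
                node.children[key[0]] = (label[:k], mid)
                child = mid
            node, key = child, key[k:]
        node.value = value

    def get(self, key):
        """Return (value, hops); value is None when the key is absent."""
        node, hops = self.root, 0
        while key:
            entry = node.children.get(key[0])
            if entry is None or not key.startswith(entry[0]):
                return None, hops
            label, node = entry
            key = key[len(label):]
            hops += 1
        return node.value, hops
```

After inserting `"abcdef"` and `"abcxyz"`, looking up `"abcdef"` takes two hops (`"abc"` then `"def"`) instead of the six an uncompressed trie would need.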

Asynchronous I/O

Transactions are executed in parallel on Monad. This means that storage needs to accommodate for multiple transactions to access the state in parallel, i.e. there should be asynchronous I/O for the database.

MonadDB supports modern async I/O implementations, which allows it to handle multiple operations without creating lots of threads. Traditional key-value databases (e.g. LMDB), by contrast, have to create multiple threads to handle multiple disk operations. The result is less overhead, given that there are fewer threads to manage.

A simple example for input/output processing in the context of crypto is:

Input: reading state to check account balances before transactions

Output: writing/updating account balances after transfers

Asynchronous I/O allows input/output processing (i.e. reading and writing to storage) even if a previous I/O operation has not yet completed. This is necessary for Monad given that multiple transactions are executing in parallel: a transaction needs to be able to read or write data while another transaction is still reading from or writing to storage. With synchronous I/O, programs execute I/O operations one at a time, in sequence. When an I/O operation is requested in synchronous I/O processing, transactions wait until the previous operation is completed. For example:

Synchronous I/O: The chain writes tx/block #1 to state/storage. The chain waits for it to complete. The chain can then write tx/block #2.

Async I/O: The chain writes tx/block #1, tx/block #2 and tx/block #3 to state/storage at the same time. They complete independently.
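The difference can be demonstrated with Python's `asyncio`. In this sketch `asyncio.sleep` stands in for a disk write, so it illustrates the scheduling behaviour only and says nothing about how MonadDB issues I/O internally; `write_block`, `sequential`, `concurrent`, and `timed` are hypothetical names.

```python
import asyncio
import time


async def write_block(n, delay=0.05):
    await asyncio.sleep(delay)  # stand-in for a disk write
    return n


async def sequential(blocks):
    # Synchronous-style: each write must finish before the next one starts.
    return [await write_block(b) for b in blocks]


async def concurrent(blocks):
    # Async-style: all writes are in flight at once and complete independently.
    return await asyncio.gather(*(write_block(b) for b in blocks))


def timed(coro_fn, blocks):
    start = time.perf_counter()
    result = asyncio.run(coro_fn(blocks))
    return list(result), time.perf_counter() - start


seq_result, seq_time = timed(sequential, [1, 2, 3])
con_result, con_time = timed(concurrent, [1, 2, 3])
```

Both runs return the same results, but the sequential run takes roughly three delays while the concurrent run takes roughly one, all on a single thread.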

StateSync

Monad has a StateSync mechanism to help new or lagging nodes catch up to the latest state efficiently without replaying every transaction from genesis. StateSync allows a node (the "client") to request a recent state snapshot up to a target block from its peers (the "servers"). Instead of obtaining the entire state from one peer, the state data is partitioned into chunks (e.g. segments of the account state and recent block headers) which are distributed across multiple validator peers to share the load. Each server responds with the requested chunk of state (leveraging metadata in MonadDB to quickly retrieve the needed trie nodes), and the client assembles these chunks to build the state at the target block. Because the chain is continuously growing, once this sync is done, the node either performs another round of StateSync closer to the tip or replays a small number of recent blocks to fully catch up. This chunked state synchronization greatly accelerates node bootstrap and recovery, ensuring that even as Monad's state grows, new validators can join or reboot and become fully synchronized without hours of delay.

Ecosystem

Ecosystem Efforts

The Monad team is focused on developing a strong and robust ecosystem for their chain. Over the past few years, competition between L1s and L2s has shifted away from being mainly focused on performance, toward being focused on user-facing applications and developer tooling. It is no longer enough for chains to boast high TPS, low latency, and low fees; they must now offer an ecosystem with a variety of different applications, ranging from DePIN to AI, and from DeFi to consumer. The reason that this has become increasingly important is the proliferation of high-performance L1s and low-cost L1s, including Solana, Sui, Aptos, and Hyperliquid, which all offer high-performance, low-cost development environments and blockspace. One advantage Monad has here is that it uses the EVM.

As mentioned previously, Monad offers full EVM bytecode and Ethereum RPC API compatibility, enabling integration for both developers and users without requiring changes to their existing workflows. One criticism often leveled at those working towards scaling the EVM is that more performant alternatives are available, such as the SVM and MoveVM. However, if a team can make software and hardware improvements to maximize EVM performance while maintaining low fees, then scaling the EVM makes sense, since there are existing network effects, developer tooling, and a capital base that can be easily accessed.

Monad's full EVM bytecode compatibility enables app and protocol instances to be ported from other standard EVMs like ETH Mainnet, Arbitrum, and OP Stack without code changes. This compatibility has both advantages and disadvantages. The primary advantage is that existing teams can easily port their applications to Monad. Additionally, developers creating new applications for Monad can leverage the vast resources, infrastructure, and tools developed for the EVM, such as Hardhat, Apeworx, Foundry, wallets like Rabby and Phantom, and analytics and indexing products like Etherscan, Parsec, and Dune.

A disadvantage of easily portable protocols and applications is that they can lead to lazy, low-effort forks and applications being launched on the chain. While it is important for a chain to have many usable products, the majority should be unique applications that cannot be accessed on other chains. For example, although a Uniswap V2-style or concentrated liquidity-based AMM is necessary for most chains, the chain must also attract a new class of protocols and applications that can draw users. Existing EVM tooling and developer resources help enable novel and unique applications. Additionally, the Monad team has implemented various programs, from accelerators to venture funding competitions, to encourage novel protocols and applications for the chain.

Ecosystem Overview

You can find a relatively comprehensive list of applications building on top of Monad in our Monad ecosystem dashboard:

https://data.asxn.xyz/dashboard/monad-ecosystem

Monad offers high throughput and minimal transaction fees, making it ideal for specific types of applications such as CLOBs, DePIN, and consumer apps, that are well-suited to benefit from a high-speed, low-cost environment.

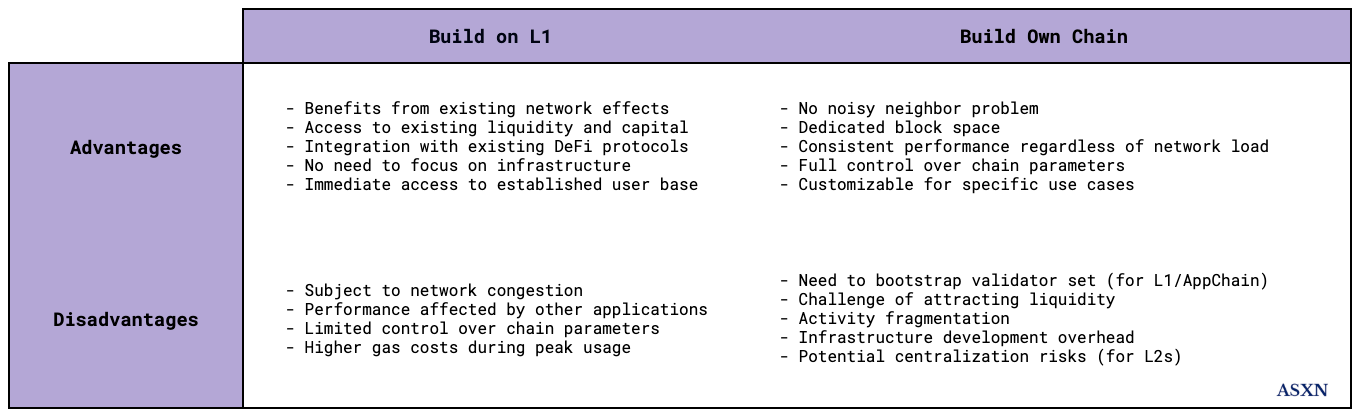

Before diving into the specific categories that are well-suited for Monad, it might be beneficial to understand why an application would choose to launch on an L1 as opposed to launching on an L2 or launching its own L1/L2/app chain.

On the one hand, launching your own L1, L2, or app chain can be beneficial as you don't have to face the noisy neighbor problem. Your block space is fully owned by you, so you can avoid congestion during high activity and maintain consistent performance regardless of overall network load. This is particularly important for CLOBs and consumer applications. During periods of congestion, traders may be unable to execute trades, while everyday users expecting Web2-like performance may find the applications unusable due to slower speeds and degraded performance.

On the other hand, launching your own L1 or app chain requires bootstrapping a validator set and, more importantly, incentivizing users to bridge over liquidity and capital to use your chain. While Hyperliquid has been successful in launching their own L1 and attracting users, there have been many teams who have not been able to do so. Building on a chain allows teams to benefit from network effects, offers secondary and tertiary liquidity effects, and enables them to integrate with other DeFi protocols and applications. It also removes the necessity to focus on infrastructure and building out a stack - which is difficult to do efficiently and effectively. It’s important to note that building out an application or protocol is vastly different to building out an L1 or appchain.

Launching your own L2 can alleviate some of these stresses, specifically those associated with bootstrapping a validator set and technical concerns with building out the infrastructure due to the existence of click-and-deploy rollup-as-a-service providers. However, these L2s are typically not particularly performant (most of them still do not have the TPS to support consumer apps or CLOBs) and tend to have risks associated with centralization (most of them are still Stage 0). Additionally, they still face disadvantages associated with liquidity and activity fragmentation, such as low activity and user operations per second (UOPS).

CLOBs

The fully onchain order book has become the benchmark for the DEX industry. While it hasn't been possible due to limitations and bottlenecks at the network level, the recent proliferation of high-throughput and low-cost environments has meant that onchain CLOBs are now possible. Previously, high gas fees (which make placing orders onchain expensive), network congestion (due to the volume of transactions necessary) and latency issues made fully onchain order book-based trading impractical. In addition, the algorithms used in matching engines for CLOBs consume significant computational resources, making them challenging and expensive to implement onchain.

Fully onchain order book-based models combine the benefits of traditional order books with complete transparency in trade execution and matching. All orders, trades, and the matching engine itself exist on the blockchain, ensuring full visibility at every level of the trading process. This approach offers several key advantages. First, it provides full transparency, as all transactions are recorded onchain, not just trade settlements, allowing for complete auditability.

Second, it mitigates MEV by reducing opportunities for front-running at order placement and cancellation levels, making the system fairer and more resistant to manipulation.

Lastly, it eliminates trust assumptions and reduces manipulation risk, as the entire order book and matching process exists onchain, removing the need to trust off-chain operators or protocol insiders and making it much harder for any party to manipulate order matching or execution. In contrast, off-chain order books compromise on these aspects, potentially allowing for front-running by insiders and manipulation by order book operators due to the lack of full transparency in the order placement and matching processes.

Onchain order books offer advantages over offchain order books, but they also offer significant advantages over AMMs:

While AMMs often lead to losses for liquidity providers due to LVR and IL, suffer from price slippage, and are vulnerable to arbitrage exploitation of stale prices, onchain order books eliminate exposure to IL or LVR for liquidity providers. Their real-time order matching prevents stale pricing and reduces arbitrage opportunities through efficient price discovery. However, AMMs do hold an advantage for riskier, lower liquidity assets, as they enable permissionless trading and asset listing, enabling price discovery for new and illiquid tokens. It's important to note that, as previously discussed, given the shorter block times on Monad, LVR is less of an issue compared to some alternatives.

CLOBs

Kuru

Twitter: https://x.com/KuruExchange

Kuru is a fully onchain CLOB on Monad, designed around three smart contracts. The system's foundation is the OrderBook contract, which serves as the trading engine and manages the order matching process, all onchain. Users interact with the OrderBook contract to place or cancel orders.

In addition to the OrderBook is the MarginAccount contract, which handles capital management for the system. This contract acts as a custodian for maker funds - before placing orders, market makers deposit their capital here. The OrderBook contract then interacts with the MarginAccount to manage fund transfers as orders are executed or cancelled. While makers use this contract, takers can execute direct swaps without first depositing funds.

The third component is the Router contract, which facilitates trading operations. This contract enables any-to-any swaps across markets and provides pathways for aggregators. It can route between different OrderBook instances for various trading pairs.

Yamata

Twitter: https://x.com/YamataExchange

Yamata is a CLOB-based trading protocol that implements an optimistic hybrid exchange ("OHX") mechanism. The system uses offchain components to improve performance with a guardian node-based architecture to verify validity.

The core architecture consists of two main components: a centralized sequencer that functions as a matching engine for processing trades, and a decentralized Guardian Node Network that provides continuous validation. The Sequencer handles order matching and execution, while Guardian Nodes verify the integrity of these operations.

When users place trades, orders are processed through two simultaneous paths: direct submission to the Sequencer via API for immediate processing, and submission to both the blockchain and IPFS for creating a verifiable record. The Sequencer batches processed orders every 10 seconds into epochs and publishes Merkle tree roots onchain, which Guardian Nodes can verify against IPFS records to ensure proper execution.

The Guardian Node Network acts as a validation layer, made up of guardian nodes whose operators stake non-transferable NFTs. These nodes continuously monitor the Sequencer's operations by comparing published Merkle roots with IPFS order data. When discrepancies are detected, nodes can initiate a challenge process that requires consensus from multiple validators.

Composite Labs

Twitter: https://x.com/CompositeLabs

Composite Labs is building a fully onchain central limit order book (“CLOB”) that integrates spot trading, perpetual futures, and lending/borrowing into a single exchange. The protocol aims to offer high leverage, cross-margining, low fees, and high capital efficiency.

A key component of Composite Labs’ design is its novel portfolio evaluation algorithm. For each token, the protocol sets two parameters: a price volatility value (e.g., 10% for ETH) and a slippage factor (e.g., 2%). To determine a trader’s “risk-adjusted portfolio valuation,” the system simulates how the portfolio would fare at both “current price + volatility” and “current price – volatility.” Whichever yields the lower portfolio value is used as the valuation. The model also factors in slippage whenever positions need to be unwound (for example, buying back borrowed tokens).

Crucially, Composite Labs’ approach correctly offsets a user’s positions. For instance, simply borrowing a token does not inherently mean you’re short; you have to sell those borrowed tokens to establish a short position. Once positions are defined, the protocol aggregates all longs and shorts to calculate net exposure. This provides more capital efficiency than traditional overcollateralized lending models. If the “risk-adjusted” valuation is negative, the account is eligible for liquidation. Otherwise, with enough collateral, traders can safely benefit from higher leverage.
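To make the valuation logic concrete, here is a minimal sketch under stated assumptions. It is not Composite Labs' code: the names are hypothetical, the price shock is applied per token, cash is treated as a riskless USD balance, and slippage is applied to both long and short unwinds (the source only mentions it for unwinding in general).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenParams:
    price: float       # current price in USD
    volatility: float  # e.g. 0.10 for ETH
    slippage: float    # e.g. 0.02


def net_exposure(held: dict[str, float], borrowed: dict[str, float]) -> dict[str, float]:
    """Net longs against shorts: borrowing a token you still hold nets to zero."""
    tokens = set(held) | set(borrowed)
    return {t: held.get(t, 0.0) - borrowed.get(t, 0.0) for t in tokens}


def worst_case_value(net: float, p: TokenParams) -> float:
    """Value the net position at price +/- volatility and keep the lower result."""
    scenarios = []
    for shocked_price in (p.price * (1 + p.volatility), p.price * (1 - p.volatility)):
        # Assumption: selling a long receives less, buying back a short costs more.
        unwind = (1 - p.slippage) if net >= 0 else (1 + p.slippage)
        scenarios.append(net * shocked_price * unwind)
    return min(scenarios)


def risk_adjusted_valuation(cash_usd: float, held: dict[str, float],
                            borrowed: dict[str, float],
                            params: dict[str, TokenParams]) -> float:
    net = net_exposure(held, borrowed)
    return cash_usd + sum(worst_case_value(amount, params[t]) for t, amount in net.items())


def is_liquidatable(valuation: float) -> bool:
    return valuation < 0


params = {"ETH": TokenParams(price=3000.0, volatility=0.10, slippage=0.02)}

# Borrowed 1 ETH but still holding it: no net exposure, so no short.
borrowed_only = risk_adjusted_valuation(1000.0, {"ETH": 1.0}, {"ETH": 1.0}, params)

# Deposited 1,000 USD, borrowed 1 ETH and sold it for 3,000 USD: a real short.
short = risk_adjusted_valuation(4000.0, {}, {"ETH": 1.0}, params)

# Same short backed by only 100 USD of collateral.
thin = risk_adjusted_valuation(3100.0, {}, {"ETH": 1.0}, params)
```

In this sketch the binding scenario for the short is the price rising 10%, with the buy-back costing a further 2%, so the 1 ETH liability is valued at roughly 3,366 USD. The well-collateralized short keeps a positive valuation of about 634 USD, while the thinly collateralized one goes negative and would be eligible for liquidation.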

DePIN

Blockchains are inherently well-suited for handling payments due to their censorship-resistant global shared state and fast transaction and settlement times. However, for a chain to support payments and value transfers efficiently, it needs to offer low, predictable fees and rapid finality. As a high-throughput L1, Monad can support emerging use cases, like DePIN applications, which demand not only high payment volumes but also onchain transactions to verify and manage hardware efficiently.

Historically, most DePIN applications have launched on Solana, for a variety of reasons. Localized fee markets enable Solana to offer low-cost transactions despite congestion in other parts of the chain. More importantly, network effects took hold. DePIN apps avoided Ethereum because of slow settlement and high fees, and Solana emerged as a low-fee, high-throughput competitor over the past few years, just as DePIN became increasingly popular. As more DePIN applications chose to launch on Solana, a relatively large DePIN developer and application community formed, alongside tool kits and frameworks. This in turn led more DePIN applications to launch where technology and development resources were already available to them.

Nevertheless, as an EVM-based, high-throughput, low-fee competitor, Monad has the opportunity to capture DePIN mindshare and applications. For this purpose, it will be key for the chain and ecosystem to develop frameworks, tool kits and ecosystem programs to onboard existing and new DePIN applications. While DePIN applications could attempt to build their own networks (either as an L2 or an L1), Monad provides a high-throughput base layer that could enable network effects, composability with other applications, deep liquidity, and robust developer tooling.

DePIN Applications

SkyTrade

Twitter: https://x.com/SkyTradeNetwork

SkyTrade is a DePIN application providing a marketplace for trading and managing rights to airspace. The platform enables property owners to register and tokenize their air rights after completing ownership verification and KYC processes.

These tokenized rights can be traded through SkyTrade's auction house marketplace, where owners can list their rights for auction, receive bids, or enter into rental/leasing arrangements. In addition to an auction mechanic, SkyTrade includes a drone integration system. Through their mobile app, Radar, the platform tracks drone activity and facilitates micropayments from drone operators to air rights owners for permitted routes. This creates a revenue stream for property owners while helping manage airspace usage. In addition, SkyTrade integrates property databases and real estate information sources like Parcl to validate ownership and provide valuations.

As of today, SkyTrade is only live on Solana, where it uses compressed NFTs (cNFTs) for tokenized air rights. The platform has an ongoing points program.

Social and Consumer

While financial applications have dominated crypto mindshare over the past few years, the last year has seen an increasing focus on consumer and social applications. These applications propose alternative monetization pathways for creators and those looking to leverage their social capital. They also aim to offer censorship resistant, composable, and increasingly financialized versions of social graphs and experiences where users have greater control over their data – or at least derive more benefit from it.

Similar to DePIN use cases, social and consumer applications require a chain that can support payments and value transfers efficiently, which means the base layer must offer low, predictable fees and rapid finality. What matters most here is latency and finality. Since Web 2 experiences are now hyperoptimized, most users expect a similar, low-latency experience. A slow experience for payments, shopping and social interactions would frustrate most users. Given that decentralized social media and consumer applications already typically lose out to centralized incumbents, they need to offer an on-par or better experience to capture mindshare and users.

Monad's rapid finality architecture is well suited to offer users a low latency and low cost experience.

Social and Consumer Applications

Kizzy

Twitter: https://x.com/kizzymobile

Kizzy is a social media betting platform focused on creator performance metrics. It allows users to bet on metrics like views and follower counts for content creators.

The platform uses a peer-to-peer betting system where users bet against each other rather than against the house. On Twitter, betting is limited to the first post per day, with reposts, pins, and replies being ineligible for betting and display on creator stats. The betting pool stays open if a creator takes longer than a day to post, remaining active until a new action is detected.

Kizzy uses its own currency called Keso for bets and payouts, which users can obtain by swapping other tokens. The odds in betting pools fluctuate based on user activity until the pool closes, at which point the odds are locked.

The platform currently supports two types of betting events: post games and account games. Post games focus on metrics like views for individual posts, while account games involve betting on follower counts. Betting pools can be refunded if there are disruptions like deleted posts or detected anomalies, but only during pre-market and live states, not after settlement. Users win money by correctly predicting outcomes when other players are incorrect. The winning side in a betting pool receives the losing side's liquidity.
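The peer-to-peer settlement described above resembles a pari-mutuel pool. The sketch below assumes winners split the losing side's liquidity pro rata to their stake, that no fee is taken, and that a pool with no winners is refunded; none of these details are confirmed by the source, and the function names are hypothetical.

```python
def implied_multiplier(bets: dict[str, tuple[str, float]], side: str) -> float:
    """Payout per unit staked on `side` if the pool closed right now.
    It moves as users add bets, which is why odds float until the pool locks."""
    total = sum(amount for _, amount in bets.values())
    side_total = sum(amount for s, amount in bets.values() if s == side)
    if side_total == 0:
        raise ValueError("no liquidity on this side yet")
    return total / side_total


def settle_pool(bets: dict[str, tuple[str, float]], winning_side: str) -> dict[str, float]:
    """Return each user's payout in Keso once the outcome is known."""
    winners_total = sum(a for s, a in bets.values() if s == winning_side)
    losers_total = sum(a for s, a in bets.values() if s != winning_side)
    if winners_total == 0:
        # Assumption: with nobody on the winning side, stakes are refunded.
        return {user: amount for user, (_, amount) in bets.items()}
    payouts = {}
    for user, (side, amount) in bets.items():
        if side == winning_side:
            # Stake back plus a pro-rata share of the losing side's liquidity.
            payouts[user] = amount + losers_total * amount / winners_total
        else:
            payouts[user] = 0.0
    return payouts


# Hypothetical post game: will a post exceed some view count?
bets = {
    "alice": ("over", 30.0),
    "bob": ("over", 10.0),
    "carol": ("under", 20.0),
}
multiplier = implied_multiplier(bets, "over")
payouts = settle_pool(bets, "over")
```

With these numbers the "over" side holds 40 of the 60 Keso in the pool, so its locked multiplier is 1.5: alice receives 45, bob receives 15, and carol's 20 is distributed between them.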

Dusted

Twitter: https://x.com/dusted_app

Dusted is a social platform that provides permissionless, token-gated chat rooms for any tokenized asset. It offers token holders a way to connect and coordinate.

When a user connects their wallet, they automatically gain access to a dedicated chatroom for each token they hold. Community members can access features such as token-tipping for peer-to-peer transactions, user verification through visualized holdings, “trench leaders” to guide community initiatives, and in-app token swaps for easy trading. Dusted supports any token type—from memecoins to NFTs and real-world assets.

Foundational Products

Apart from CLOBs, DePIN and consumer/social applications building on top of Monad, we are also excited for a new generation of foundational apps, such as aggregators and LSDs as well as AI products.

General purpose chains need to have a broad variety of foundational products that work to both attract and keep users on the chain - so that users do not have to go from one chain to another to fulfill their needs. This has been the case so far with L2s. As mentioned previously, users would have to fulfill their options trading or perpetuals needs on Arbitrum, while having to bridge over to Base to engage in social and consumer applications, and then bridge out to Sanko or Xai to partake in games. A general purpose chain's success depends on offering all of these under one single unified state, with low costs and latency and high throughput and speed.

Below is a short, select list of applications to highlight some ecosystem projects being built on top of Monad:

Liquid Staking Applications

We also recently released our Monad liquid staking dashboard for testnet, which you can find here:

https://data.asxn.xyz/dashboard/monad-liquid-staking

Stonad - Thunderhead

Twitter: https://x.com/stonad_

Stonad is a liquid staking protocol on Monad built by Thunderhead. The protocol allows users to stake MON and receive stMON tokens in return, so they maintain liquidity while their MON is staked.

Community codes function like onchain referral links. Each stMON operator is assigned a community code, which can be passed as a parameter in the transaction that stakes MON with Thunderhead. If a community code is included in this transaction, all the stake from that transaction will be managed by the operator assigned that code.

Similar to StakedHype, Stonad will likely onboard operators based on their alignment with the Monad/Stonad ecosystem, performance metrics, and security practices.

AtlasEVM

Twitter: https://x.com/atlasevm

AtlasEVM is a modular execution abstraction framework for EVM-based chains which transforms how transactions are executed. It introduces a universal auction system where Solvers compete to provide optimal outcomes for user intents or MEV redistribution. Instead of users crafting transactions, they express high-level intents (e.g. “swap X for Y”), and Solvers bid to execute those intents in a single, efficient transaction.

Protocols can integrate Atlas by using its SDK to capture user intents and broadcast them to a network of Solvers. Solvers respond with Solver Operations – proposals that might include finding the best trade route or bundling a profitable arbitrage. An auction mechanism then selects the best proposal, and Atlas’s EntryPoint contract combines the user’s intent and the solver’s solution into one atomic transaction. Users don’t need a special smart wallet – AtlasEVM works with regular accounts, making it backwards-compatible with existing dApps.
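The flow above (intent, Solver Operations, auction, atomic bundle) can be sketched as follows. This is an illustrative model only and does not use the Atlas SDK or its contract interfaces: every class and function name is hypothetical, and ranking solvers purely by the output amount they promise is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UserIntent:
    user: str
    sell_token: str
    buy_token: str
    sell_amount: float
    min_buy_amount: float  # worst outcome the user will accept


@dataclass(frozen=True)
class SolverOperation:
    solver: str
    buy_amount: float  # amount of buy_token the solver commits to deliver


def run_auction(intent: UserIntent, ops: list[SolverOperation]) -> Optional[SolverOperation]:
    """Pick the proposal that gives the user the most, ignoring any that
    fall below the user's minimum. Returns None if no solver qualifies."""
    valid = [op for op in ops if op.buy_amount >= intent.min_buy_amount]
    if not valid:
        return None
    return max(valid, key=lambda op: op.buy_amount)


def build_atomic_bundle(intent: UserIntent, winner: SolverOperation) -> dict:
    """Stand-in for combining the user's intent and the winning solution
    into one transaction that either fully executes or fully reverts."""
    return {"user_op": intent, "solver_op": winner}


intent = UserIntent("0xUser", "USDC", "MON", sell_amount=100.0, min_buy_amount=49.0)
proposals = [
    SolverOperation("solver_a", buy_amount=49.5),
    SolverOperation("solver_b", buy_amount=50.2),
    SolverOperation("solver_c", buy_amount=48.0),  # below the user's minimum
]
winner = run_auction(intent, proposals)
bundle = build_atomic_bundle(intent, winner) if winner else None
```

In this example `solver_b` wins because it commits to the highest output, and `solver_c` is excluded for falling below the user's minimum. The point of the design is that solvers compete on the user's outcome rather than the user having to find the best route themselves.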

AtlasEVM enables user-centric MEV capture, meaning value that would normally be taken by arbitrage bots can instead benefit users or protocols. By running decentralized auctions for transaction execution, it forces competition among Solvers, which can lead to better prices and outcomes for end-users.

Kintsu

Twitter: https://x.com/Kintsu_xyz

Kintsu is a liquid staking protocol built on Monad that allows users to stake MON tokens while maintaining liquidity for other onchain activities. The protocol works by pooling users' MON tokens and delegating them across network validators, issuing sMON tokens in return that represent a share of the total staked pool.

The core functionality revolves around a Vault contract that handles staking and unstaking operations. When users stake MON, they receive sMON tokens at a conversion rate determined by the current Redemption Ratio. The unstaking process involves a cooldown period enforced by the chain itself, after which users can redeem their MON tokens.
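The stake and unstake mechanics can be illustrated with a simple share-based vault. This is a sketch under assumptions, not Kintsu's Vault contract: the Redemption Ratio is assumed to be pooled MON divided by sMON supply, the cooldown is modeled as a block count, each user has at most one pending request, and all names are hypothetical.

```python
class Vault:
    """Toy model of a liquid staking vault with a cooldown on withdrawals."""

    def __init__(self, cooldown_blocks: int) -> None:
        self.total_mon = 0.0    # MON pooled and delegated to validators
        self.total_smon = 0.0   # sMON supply
        self.cooldown_blocks = cooldown_blocks
        self.pending: dict[str, tuple[float, int]] = {}  # user -> (MON owed, unlock block)

    def redemption_ratio(self) -> float:
        """MON redeemable per sMON (assumed definition); 1.0 for an empty vault."""
        if self.total_smon == 0:
            return 1.0
        return self.total_mon / self.total_smon

    def stake(self, mon: float) -> float:
        """Deposit MON and mint sMON at the current ratio."""
        minted = mon / self.redemption_ratio()
        self.total_mon += mon
        self.total_smon += minted
        return minted

    def accrue_rewards(self, mon: float) -> None:
        """Staking rewards grow the pool without minting sMON, raising the ratio."""
        self.total_mon += mon

    def request_unstake(self, user: str, smon: float, current_block: int) -> float:
        """Burn sMON now; the MON becomes claimable after the cooldown."""
        mon_owed = smon * self.redemption_ratio()
        self.total_smon -= smon
        self.total_mon -= mon_owed
        self.pending[user] = (mon_owed, current_block + self.cooldown_blocks)
        return mon_owed

    def redeem(self, user: str, current_block: int) -> float:
        mon_owed, unlock_block = self.pending[user]
        if current_block < unlock_block:
            raise ValueError("cooldown has not elapsed")
        del self.pending[user]
        return mon_owed


vault = Vault(cooldown_blocks=100)
alice_smon = vault.stake(100.0)   # ratio 1.0, so 100 sMON
vault.accrue_rewards(25.0)        # ratio rises to 1.25
bob_smon = vault.stake(50.0)      # 50 / 1.25 = 40 sMON
bob_owed = vault.request_unstake("bob", bob_smon, current_block=10)
bob_mon = vault.redeem("bob", current_block=110)
```

A later staker receives fewer sMON per MON because each sMON already represents a larger share of the pool, and after the cooldown bob gets back the 50 MON he put in, since no further rewards accrued in between.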

The protocol is designed to be MEV-client agnostic, with MEV rewards eventually being redistributed to sMON holders through stake delegation. In addition, Kintsu plans to implement cross-chain functionality through intent-based bridging.

aPriori

Twitter: https://x.com/apr_labs

aPriori is a liquid staking and MEV infrastructure protocol built natively on Monad. The protocol combines traditional liquid staking mechanics with MEV extraction capabilities, creating an integrated system to maximize validator returns while maintaining network efficiency. At its core, aPriori operates through a dual-layer architecture that manages both staking operations and MEV extraction through a unified validator set.

The protocol's liquid staking mechanism allows MON token holders to stake their assets while maintaining liquidity through derivative tokens. When users deposit MON tokens, they receive a liquid staking derivative in return while their original tokens are staked via aPriori's validator network. These validators participate in both network consensus and MEV opportunities, with extracted value distributed back to stakers as additional yield.

The MEV infrastructure layer implements a specialized auction system designed specifically for Monad's high-performance environment and MonadBFT consensus mechanism. This system organizes MEV extraction through structured auctions that reduce network spam while creating a marketplace for MEV opportunities.

Magma

Twitter: https://x.com/MagmaStaking

Magma is a DAO-owned liquid staking protocol built on Monad that enables token holders to stake and earn rewards while maintaining liquidity through its gMON token. The protocol allows users to stake Monad tokens and receive gMON tokens on a 1:1 basis.